This month I’ll address a facet of the ethics of artificial intelligence (AI) and analytics that I think many people don’t fully appreciate. Namely, the ethics of a given algorithm can vary based on the specific scope and context of the deployment being proposed. What is considered unethical within one scope and context might be perfectly fine in another. I’ll illustrate with an example and then provide steps you can take to make sure your AI deployments stay ethical.

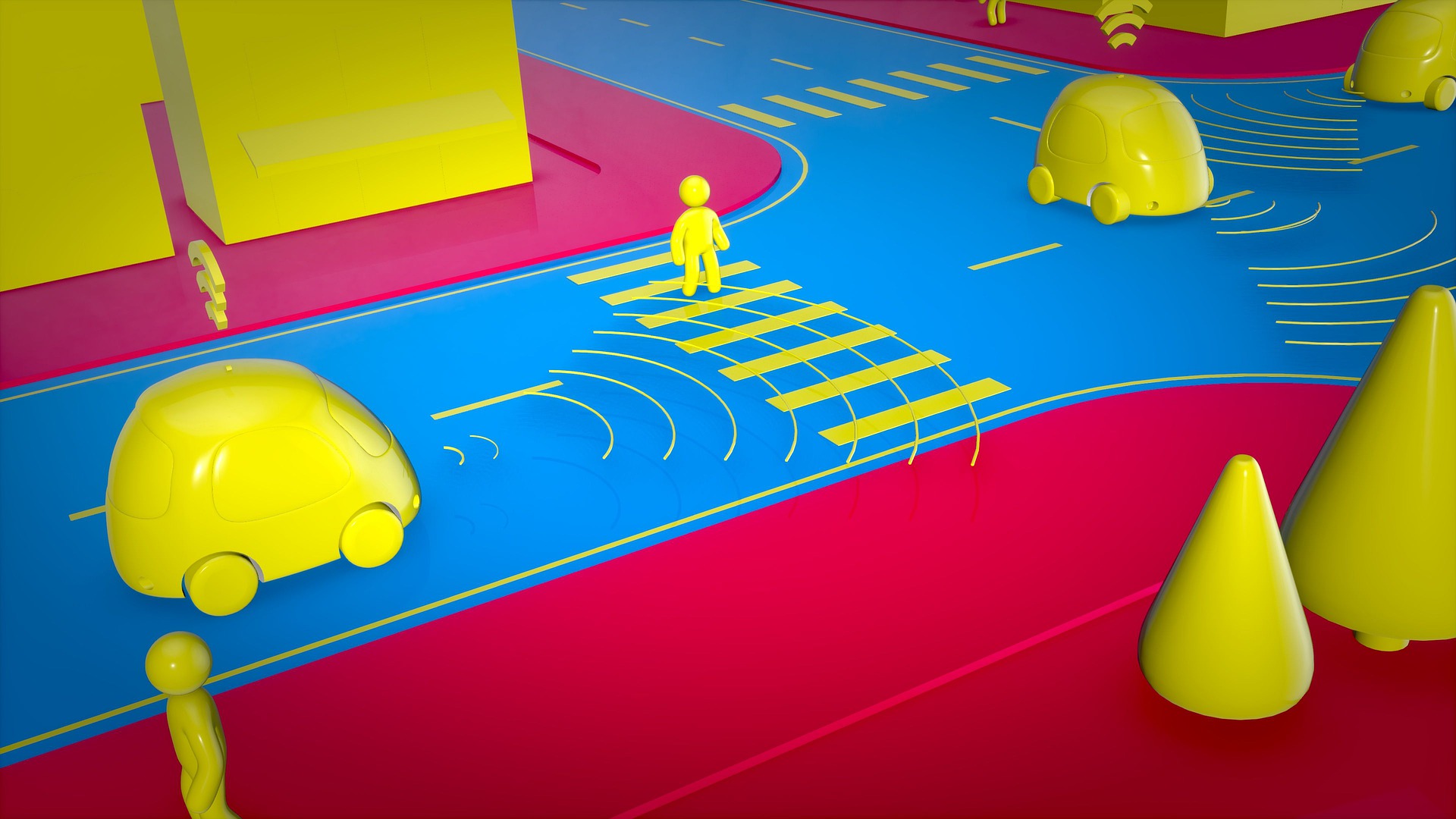

Why Autonomous Cars Aren’t Yet Ethical For Wide Deployment

There are limited tests of fully autonomous, driverless cars happening around the world today. However, the cars are largely restricted to low-speed city streets where they can stop quickly if something unusual occurs. Of course, even these low-speed cars aren’t without issues. For example, there are reports of autonomous cars getting confused and stopping when they don’t need to, then causing a traffic jam because they won’t start moving again.

We don’t yet see cars running in full autonomous mode on higher-speed roads and in complex traffic, however. That is largely because so many more things can go wrong when a car is moving fast and isn’t on a well-defined grid of streets. If an autonomous car traveling 15 miles per hour encounters something it doesn’t know how to handle, it can safely slam on the brakes. In heavy traffic at 65 miles per hour, however, slamming on the brakes can cause a massive accident. Thus, until we’re confident that autonomous cars will handle virtually every scenario safely, including novel ones, it simply won’t be ethical to unleash them at scale on the roadways.

Some Huge Vehicles Are Already Fully Autonomous – And Ethical!

If cars can’t ethically be fully autonomous today, surely huge farm equipment with spinning blades and massive size can’t, right? Wrong! Manufacturers such as John Deere have fully autonomous farm equipment operating in fields today. You can see one example in the accompanying picture. This massive machine rolls through fields on its own and yet it is ethical. Why is that?

In this case, while the equipment is massive and dangerous, it is in a field all by itself and moving at a relatively low speed. There are no other vehicles to avoid and few obstacles. If the tractor sees something it isn’t sure how to handle, it simply stops and alerts the farmer who owns it via an app. The farmer looks at the image and decides what to do. If what’s in the picture is just a puddle reflecting clouds in an odd way, the equipment can be told to proceed. If the picture shows an injured cow, the equipment can be told to stay stopped until the cow is attended to.
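For readers who think in code, the stop-and-ask pattern described above can be sketched roughly as follows. This is a minimal illustration under my own assumptions, not how John Deere or any other manufacturer actually implements it: the names `Detection`, `decide`, and `resolve`, the confidence threshold, and the list of "known safe" labels are all hypothetical.

```python
from dataclasses import dataclass
from enum import Enum


class Action(Enum):
    CONTINUE = "continue"
    STOP_AND_ASK = "stop_and_ask"


@dataclass
class Detection:
    label: str         # what the perception system thinks it sees
    confidence: float  # 0.0 to 1.0, how sure it is


# Hypothetical threshold: below this, the machine does not trust itself.
CONFIDENCE_THRESHOLD = 0.9
# Hypothetical set of things the machine may drive past on its own.
KNOWN_SAFE = {"clear_field", "crop_row"}


def decide(detection: Detection) -> Action:
    """Keep going only for confident, known-safe detections; otherwise stop and ask."""
    if detection.label in KNOWN_SAFE and detection.confidence >= CONFIDENCE_THRESHOLD:
        return Action.CONTINUE
    return Action.STOP_AND_ASK


def resolve(action: Action, farmer_says_proceed: bool) -> str:
    """Apply the farmer's answer once the machine has stopped and asked."""
    if action is Action.CONTINUE:
        return "moving"
    return "moving" if farmer_says_proceed else "stopped"
```

The design choice worth noticing is that stopping is the default. The machine proceeds on its own only when it is both confident and looking at something already known to be safe, and every other case goes to a human. That default is affordable in a slow, empty field and is exactly what is not available at 65 miles per hour in heavy traffic.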

This autonomous vehicle is ethical to deploy because the equipment is in a contained environment, can safely stop quickly when confused, and has a human partner as backup to help handle unusual situations. The scope and context of the autonomous farm equipment are different enough from those of everyday cars that the ethics calculations lead to a different conclusion.

Putting The Scope And Context Concept Into Practice

There are a few key points to take away from this example. First, you can’t simply label a specific type of AI algorithm or application as “ethical” or “unethical”. You must also consider the specific scope and context of each proposed deployment and make a fresh assessment for every individual case.

Second, it’s necessary to revisit past decisions regularly. As autonomous vehicle technology advances, for example, more types of autonomous vehicle deployments will move into the ethical zone. Similarly, in a corporate environment, updated governance and legal constraints could move something from being unethical to ethical – or the other way around. A decision based on ethics is accurate for a point in time, not for all time.

Finally, it’s necessary to research and consider all of the risks and mitigations at play because a situation might not be what a first glance would suggest. For example, most people would assume autonomous heavy machinery to be a huge risk if they haven’t thought through the detailed realities outlined in the prior example.

All of this goes to reinforce that ensuring ethical deployments of AI and other analytical processes is a continuous and ongoing endeavor. You must consider each proposed deployment, at a moment in time, while accounting for all identifiable risks and benefits. This means that, as I’ve written before, you must be intentional and diligent about considering ethics every step of the way as you plan, build, and deploy any AI process.

Originally posted in the Analytics Matters newsletter on LinkedIn

The post Same AI + Different Deployment Plans = Different Ethics appeared first on Datafloq.