Image by Author

Everyone seems to be focusing on building better LLMs (large language models), while Groq focuses on the infrastructure side of AI, making these large models faster.

In this tutorial, we will learn about the Groq LPU Inference Engine and how to use it locally on your laptop using the API and Jan AI. We will also integrate it into VSCode to help us generate code, refactor it, document it, and generate unit tests. We will be creating our own AI coding assistant for free.

What is the Groq LPU Inference Engine?

The Groq LPU (Language Processing Unit) Inference Engine is designed to generate fast responses for computationally intensive applications with a sequential component, such as LLMs.

Compared to CPUs and GPUs, the LPU has greater computing capacity, which reduces the time it takes to predict each word, so sequences of text are generated much faster. Moreover, the LPU also addresses memory bottlenecks to deliver better performance on LLMs than GPUs.

In short, Groq LPU technology makes your LLMs super fast, enabling real-time AI applications. Read the Groq ISCA 2022 Paper to learn more about the LPU architecture.

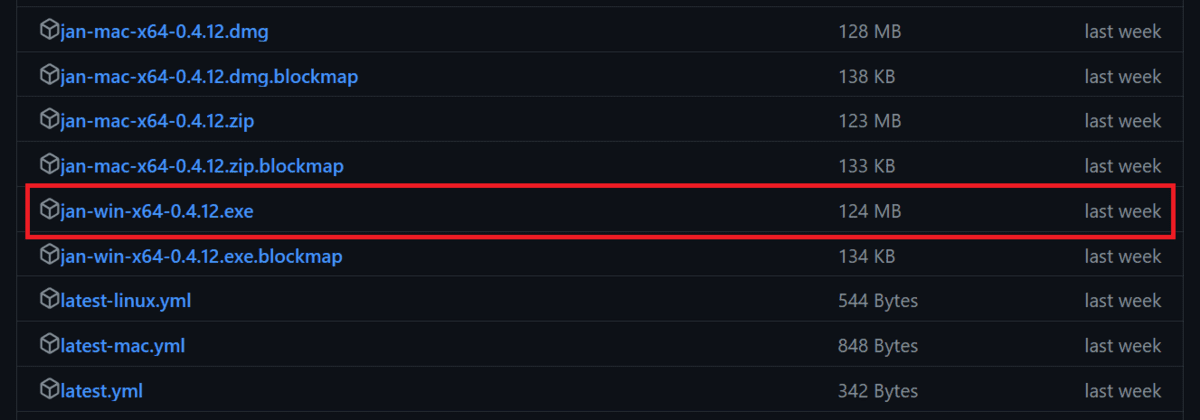

Installing Jan AI

Jan AI is a desktop application that runs open-source and proprietary large language models locally. It is available to download for Linux, macOS, and Windows. We will download and install Jan AI on Windows by going to the Releases · janhq/jan (github.com) page and clicking on the file with the `.exe` extension.

If you want to use LLMs locally to enhance privacy, read the 5 Ways To Use LLMs On Your Laptop blog and start using top-of-the-line open-source language models.

Creating the Groq Cloud API

To use Groq Llama 3 in Jan AI, we need an API key. To get one, we will create a Groq Cloud account by going to https://console.groq.com/.

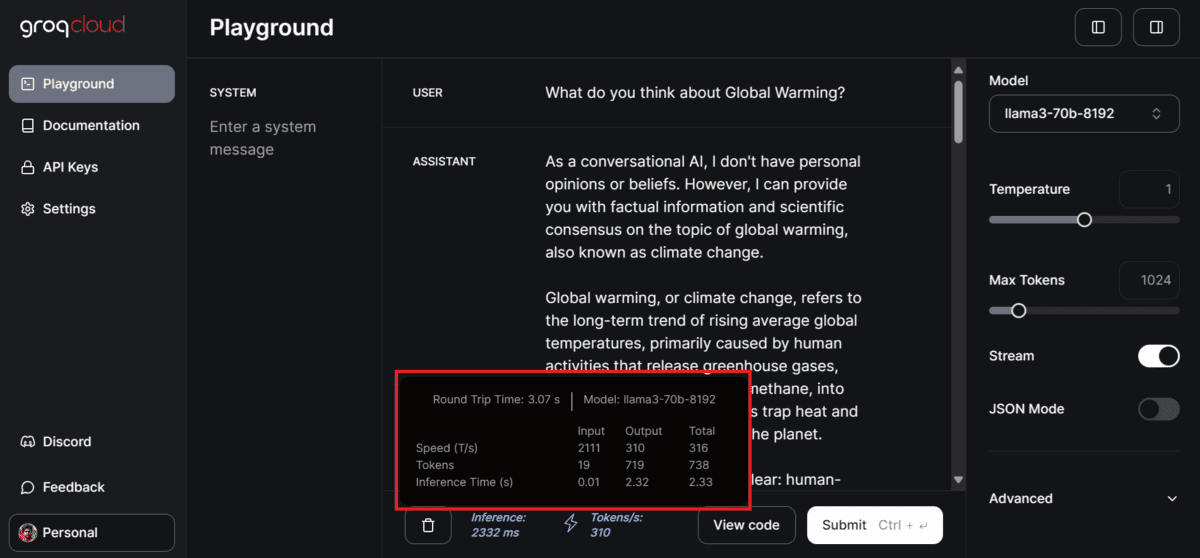

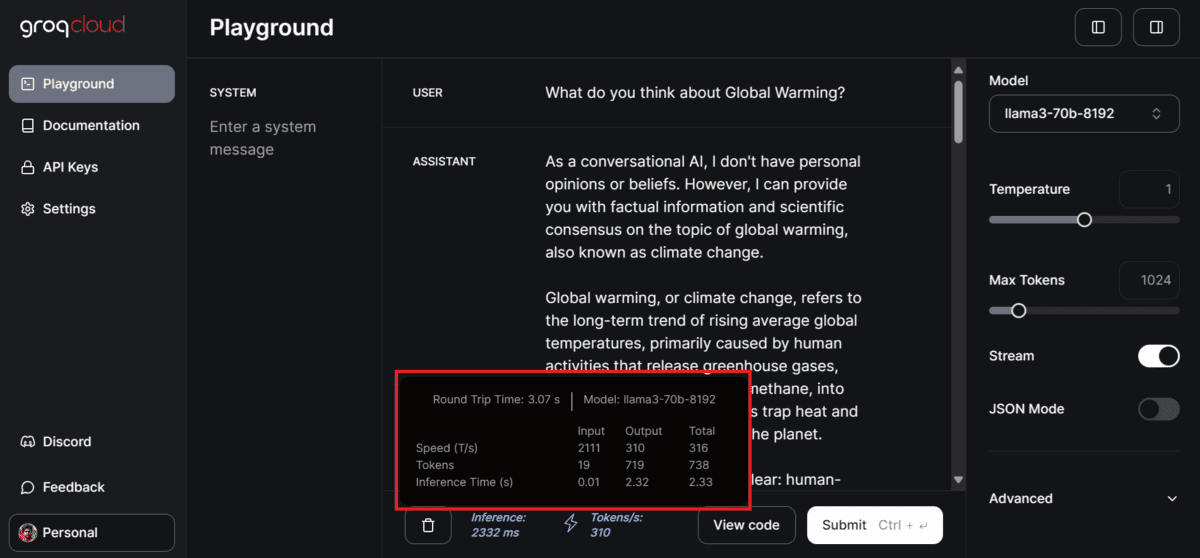

If you want to test the various models offered by Groq, you can do that without setting up anything by going to the “Playground” tab, selecting the model, and adding the user input.

In our case, it was super fast. It generated 310 tokens per second, which is by far the most I have seen in my life. Even Azure AI or OpenAI cannot produce this kind of result.
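To put the 310 tokens per second figure in perspective, here is a small arithmetic sketch. The helper names are hypothetical and the 620-token, 2-second sample is made up purely for illustration; only the 310 tokens per second rate comes from the Playground run above.

```python
def tokens_per_second(completion_tokens, elapsed_seconds):
    """Throughput: generated tokens divided by wall-clock generation time."""
    if elapsed_seconds <= 0:
        raise ValueError("elapsed_seconds must be positive")
    return completion_tokens / elapsed_seconds


def seconds_to_generate(num_tokens, rate_tokens_per_second):
    """Time needed to stream num_tokens at a steady generation rate."""
    if rate_tokens_per_second <= 0:
        raise ValueError("rate_tokens_per_second must be positive")
    return num_tokens / rate_tokens_per_second


# Illustrative sample: 620 tokens generated in 2.0 seconds.
rate = tokens_per_second(620, 2.0)

# A 500-token answer at the rate observed in the Playground (310 tokens/s).
wait = seconds_to_generate(500, 310)

print(f"{rate:.0f} tokens/s, {wait:.2f} s for a 500-token answer")
```

At that rate, a 500-token answer arrives in roughly 1.6 seconds, which is why the output feels instantaneous.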

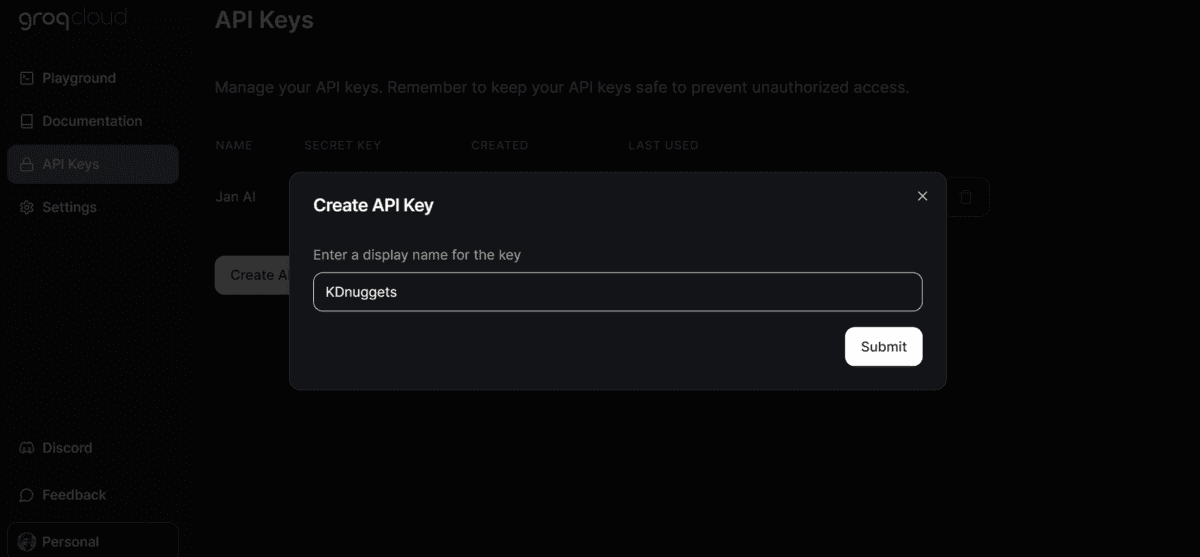

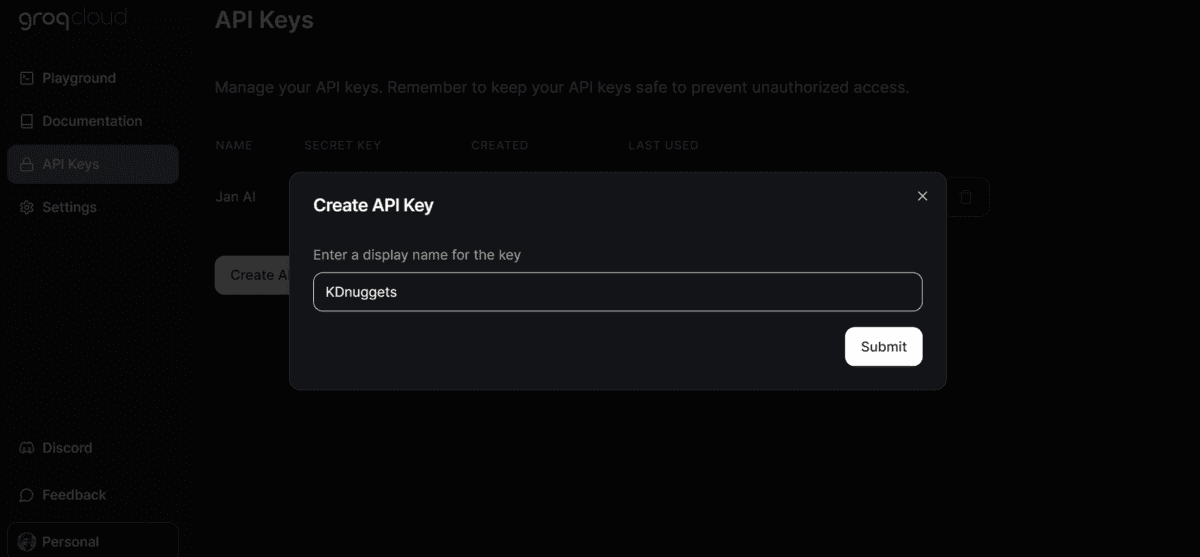

To generate the API key, click on the “API Keys” button on the left panel, then click on the “Create API Key” button to create the key, and copy it.
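The same key can also be used directly from a script. The following is a minimal sketch, not an official client: it assumes Groq's OpenAI-compatible chat completions endpoint, a model ID of `llama3-70b-8192` (check the console for the current name), and a hypothetical environment variable `GROQ_API_KEY` holding the key you just copied.

```python
import json
import os
import urllib.request

# Assumptions: the endpoint URL and model ID below may differ from what your
# Groq console shows; adjust them to match your account.
GROQ_CHAT_URL = "https://api.groq.com/openai/v1/chat/completions"
DEFAULT_MODEL = "llama3-70b-8192"


def build_chat_request(api_key, prompt, model=DEFAULT_MODEL):
    """Build (but do not send) a chat completion request for a single prompt."""
    payload = {
        "model": model,
        "messages": [{"role": "user", "content": prompt}],
    }
    return urllib.request.Request(
        GROQ_CHAT_URL,
        data=json.dumps(payload).encode("utf-8"),
        headers={
            "Authorization": f"Bearer {api_key}",
            "Content-Type": "application/json",
            # Some gateways reject urllib's default user agent, so set one.
            "User-Agent": "groq-tutorial-sketch/0.1",
        },
        method="POST",
    )


def ask_groq(prompt, model=DEFAULT_MODEL):
    """Send the prompt and return the text of the first returned choice."""
    api_key = os.environ["GROQ_API_KEY"]  # hypothetical variable name
    request = build_chat_request(api_key, prompt, model)
    with urllib.request.urlopen(request, timeout=30) as response:
        body = json.load(response)
    return body["choices"][0]["message"]["content"]
```

With the key exported in your shell, calling `ask_groq("Explain what an LPU is in one sentence.")` should return the model's reply as a string. Reading the key from the environment keeps it out of the source file.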

Using Groq in Jan AI

In the next step, we will paste the Groq Cloud API key into the Jan AI application.

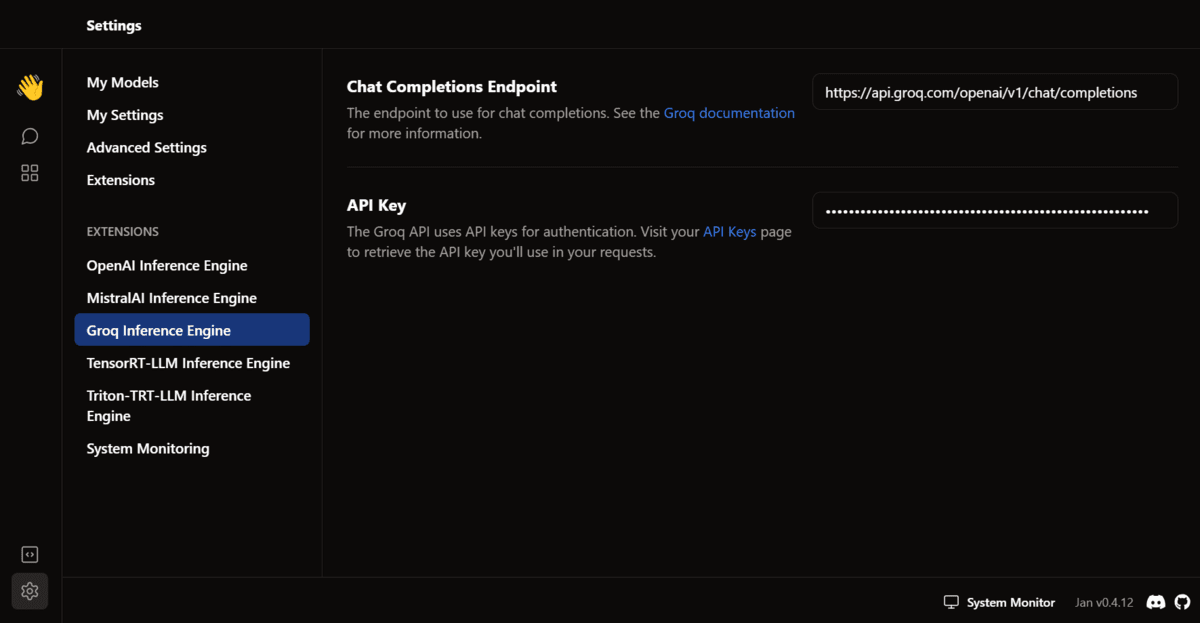

Launch the Jan AI application, go to the settings, select the “Groq Inference Engine” option in the extension section, and add the API key.

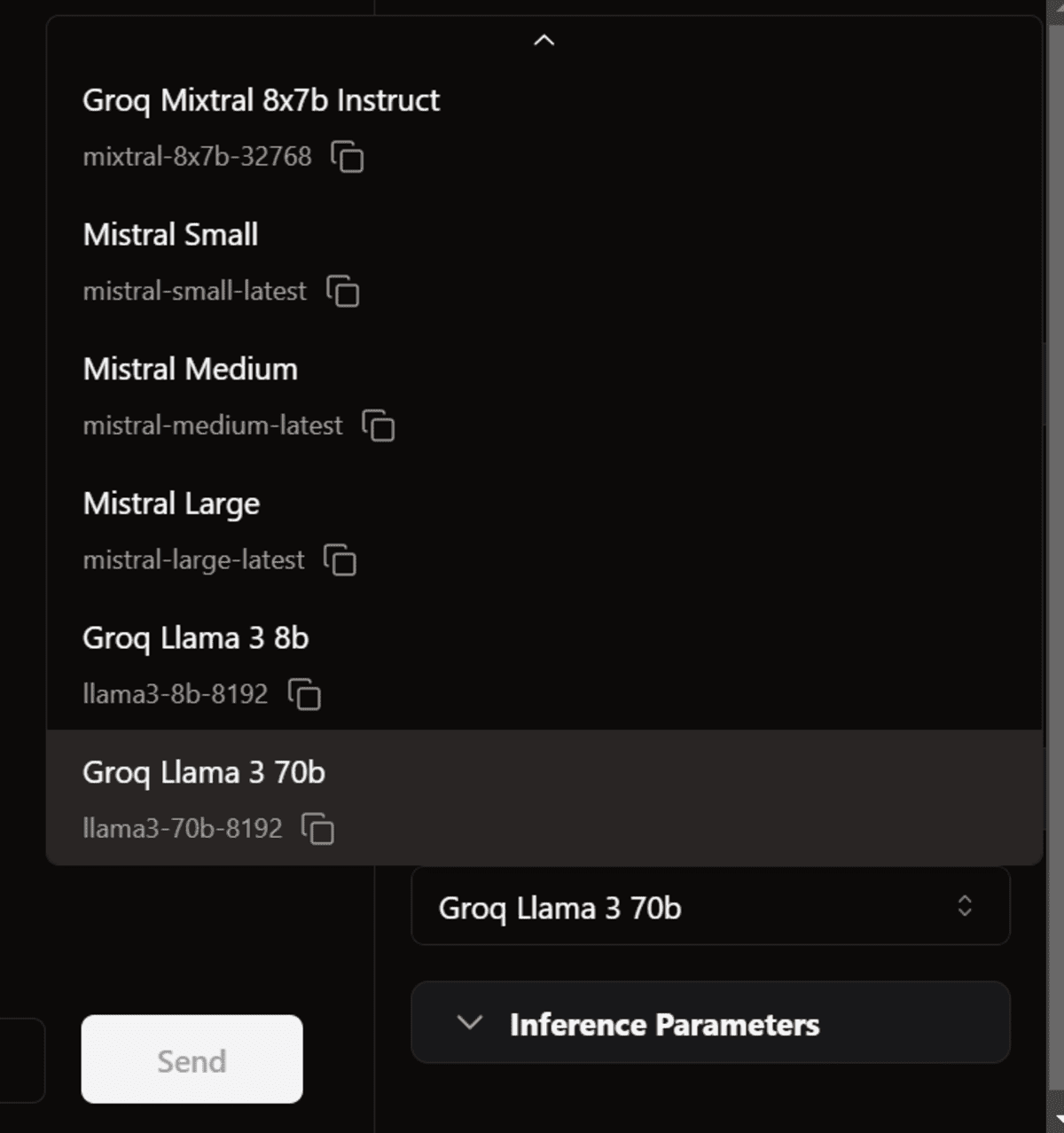

Then, return to the thread window. In the model section, select Groq Llama 3 70B in the “Remote” section and start prompting.

The response generation is so fast that I cannot even keep up with it.

Note: The free version of the API has some limitations. Go to https://console.groq.com/settings/limits to learn more about them.
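If you call the API from your own scripts, hitting those limits is easier to live with when requests are retried after a pause. Below is a generic exponential backoff sketch. `RateLimitError` is a hypothetical stand-in for whatever your client raises when the service answers with a rate limit response (assumed here to be HTTP 429), and the delays are illustrative rather than values recommended by Groq.

```python
import random
import time


class RateLimitError(Exception):
    """Hypothetical stand-in for the error raised on a rate limit response."""


def call_with_backoff(fn, max_attempts=5, base_delay=1.0, sleep=time.sleep):
    """Call fn(), retrying on RateLimitError with exponential backoff.

    The wait doubles on every failed attempt and gets a little random jitter
    so that several clients do not retry in lockstep. The last failure is
    re-raised once max_attempts is exhausted.
    """
    for attempt in range(max_attempts):
        try:
            return fn()
        except RateLimitError:
            if attempt == max_attempts - 1:
                raise
            delay = base_delay * (2 ** attempt) + random.uniform(0, base_delay)
            sleep(delay)
```

To use it, wrap the request in a function that raises `RateLimitError` on a rate limit response and pass that function to `call_with_backoff`. The `sleep` parameter exists only so the waiting can be swapped out in tests.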

Using Groq in VSCode

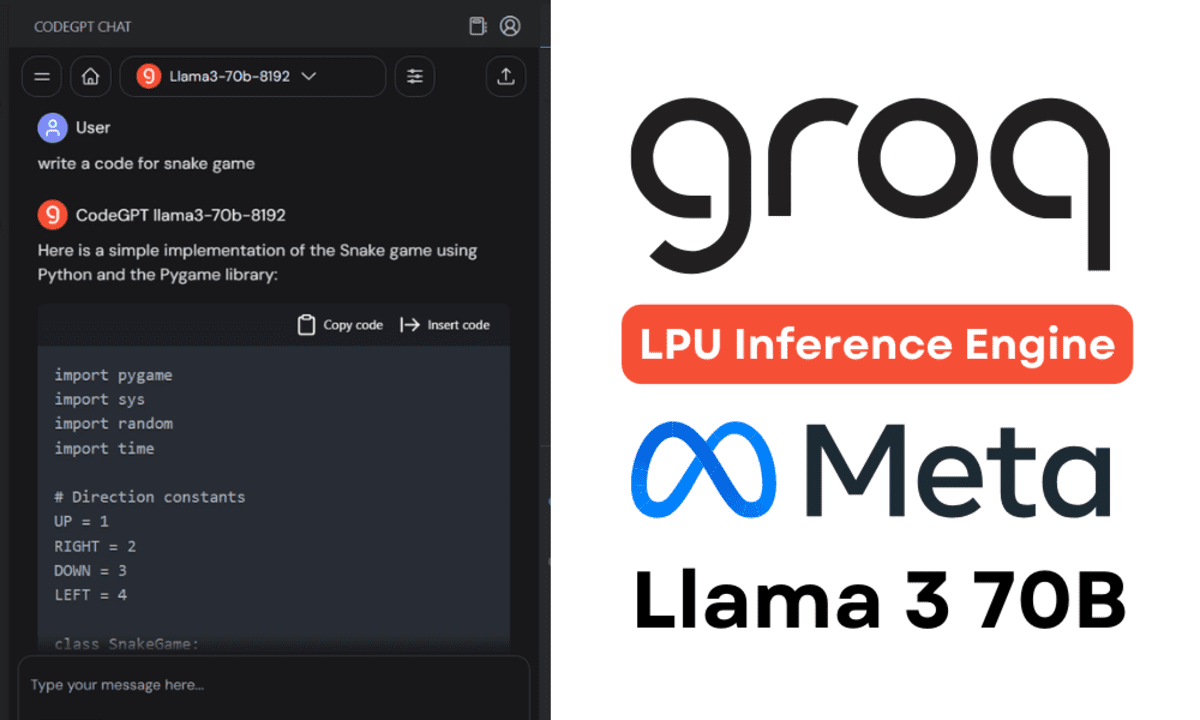

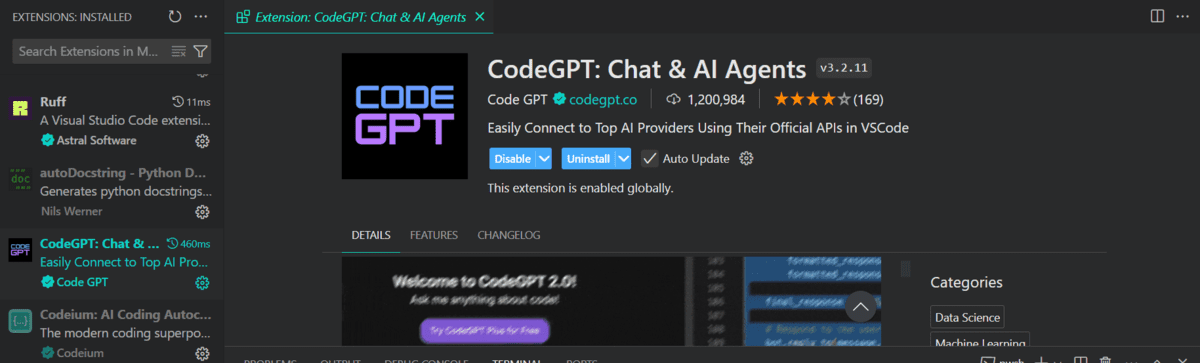

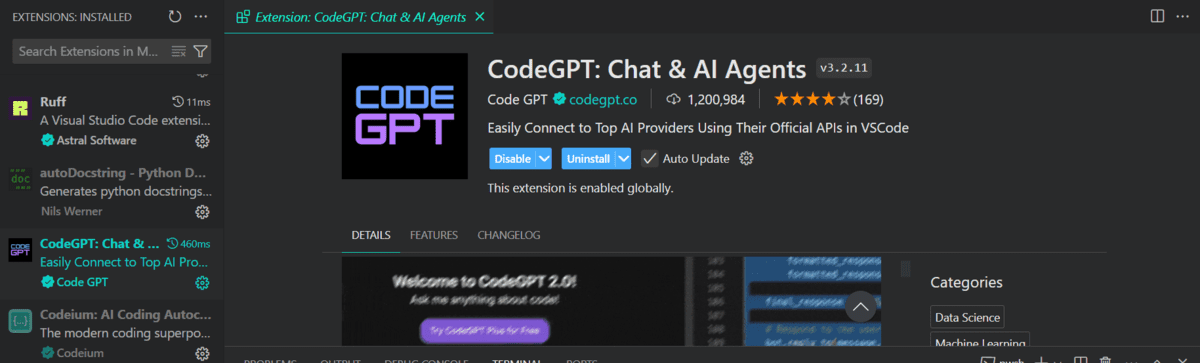

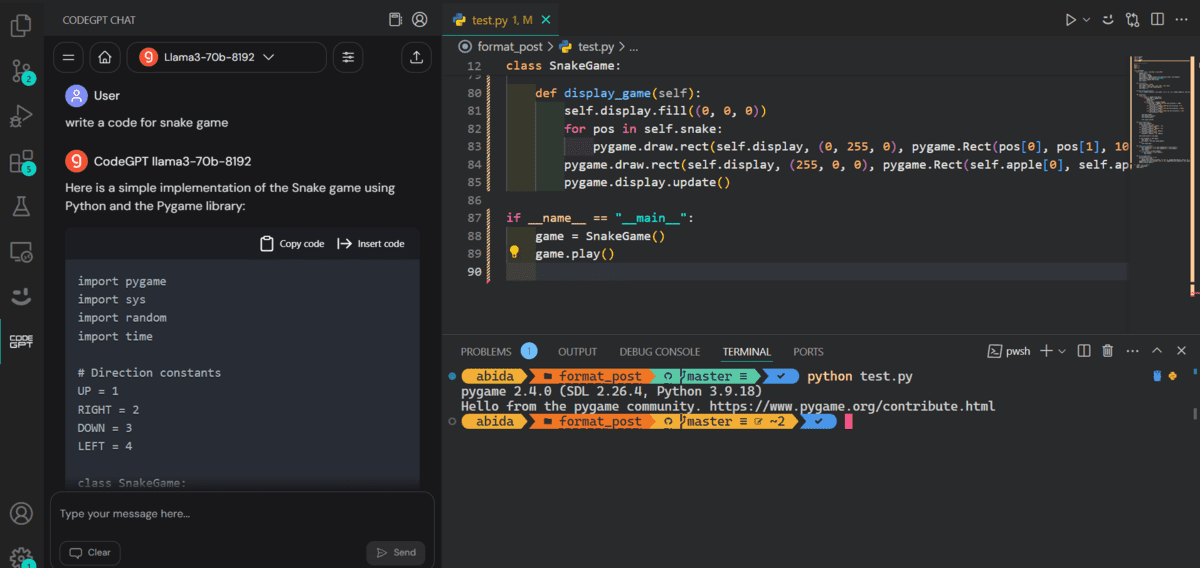

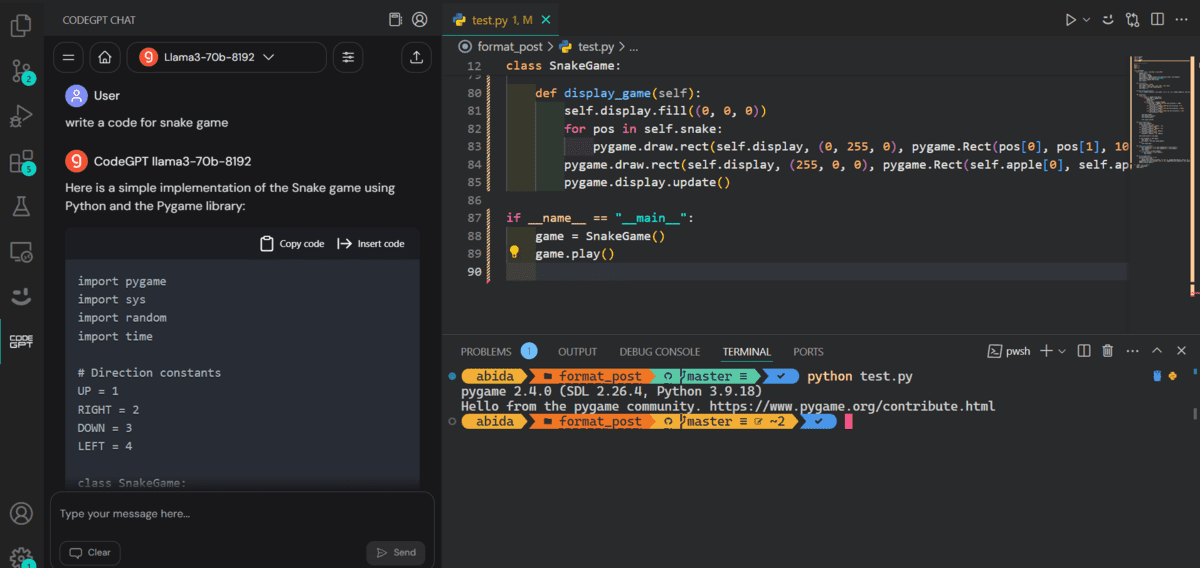

Next, we will try pasting the same API key into the CodeGPT VSCode extension and build our own free AI coding assistant.

Install the CodeGPT extension by searching for it in the extension tab.

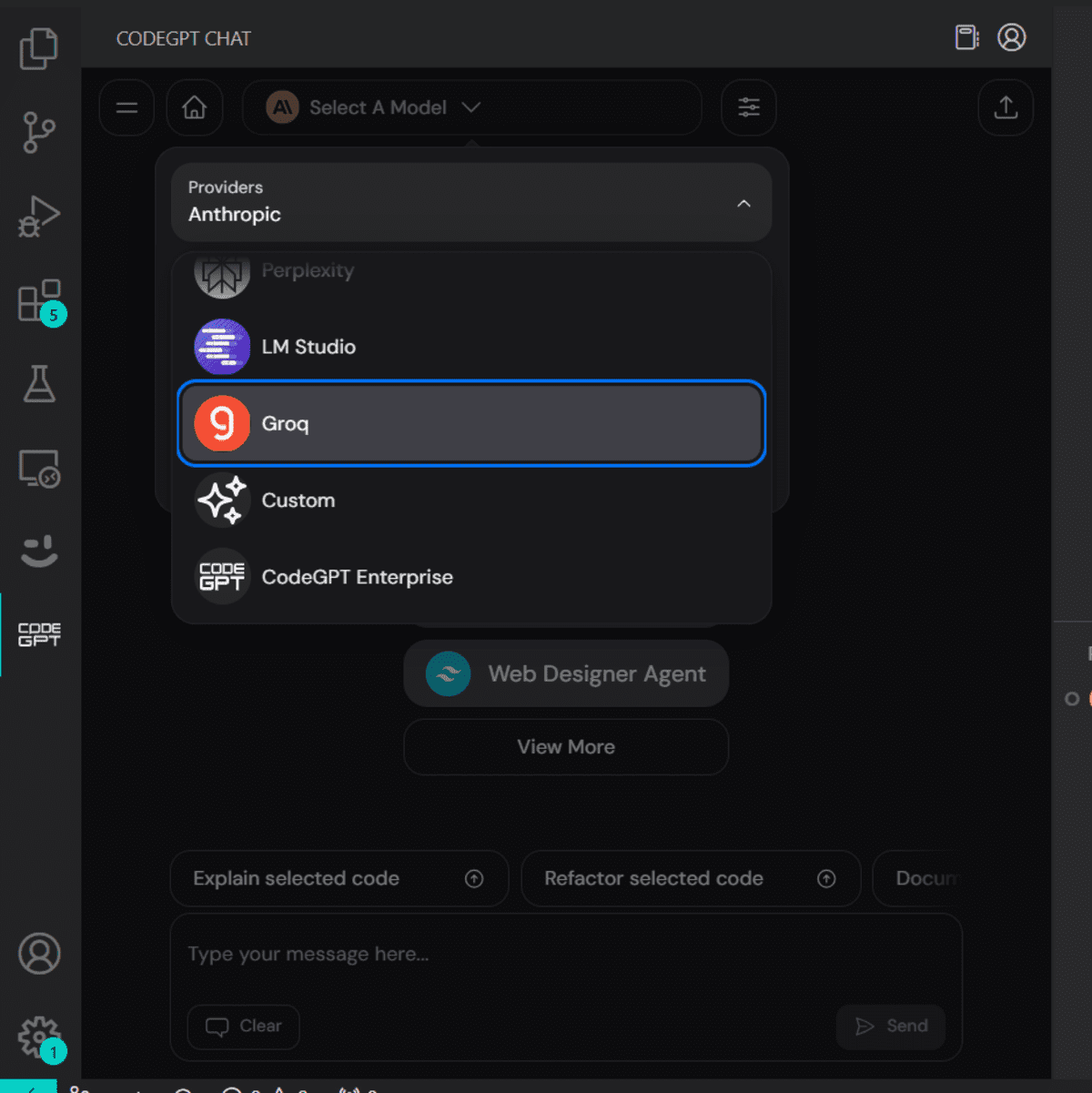

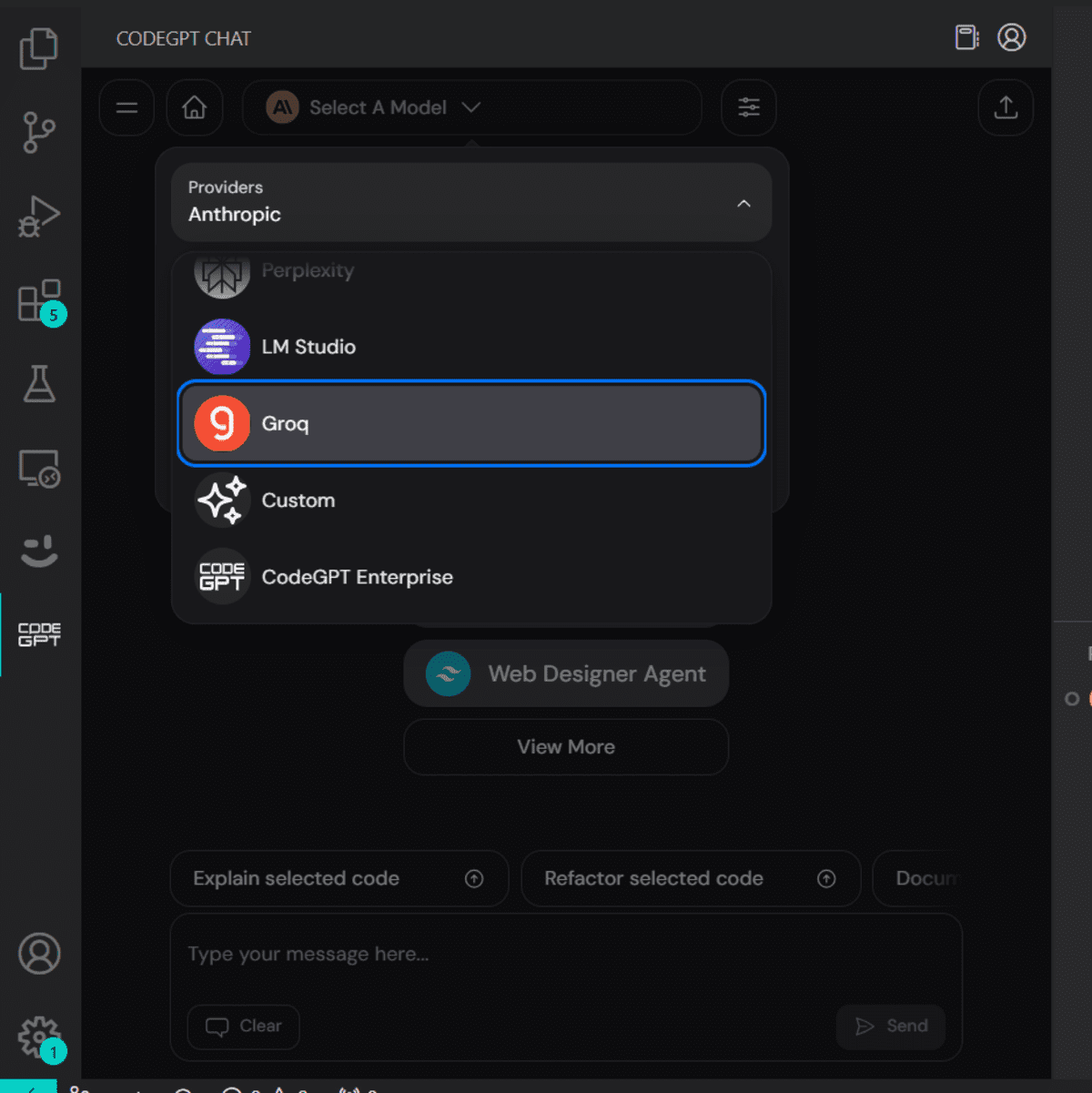

The CodeGPT tab will appear for you to select the model provider.

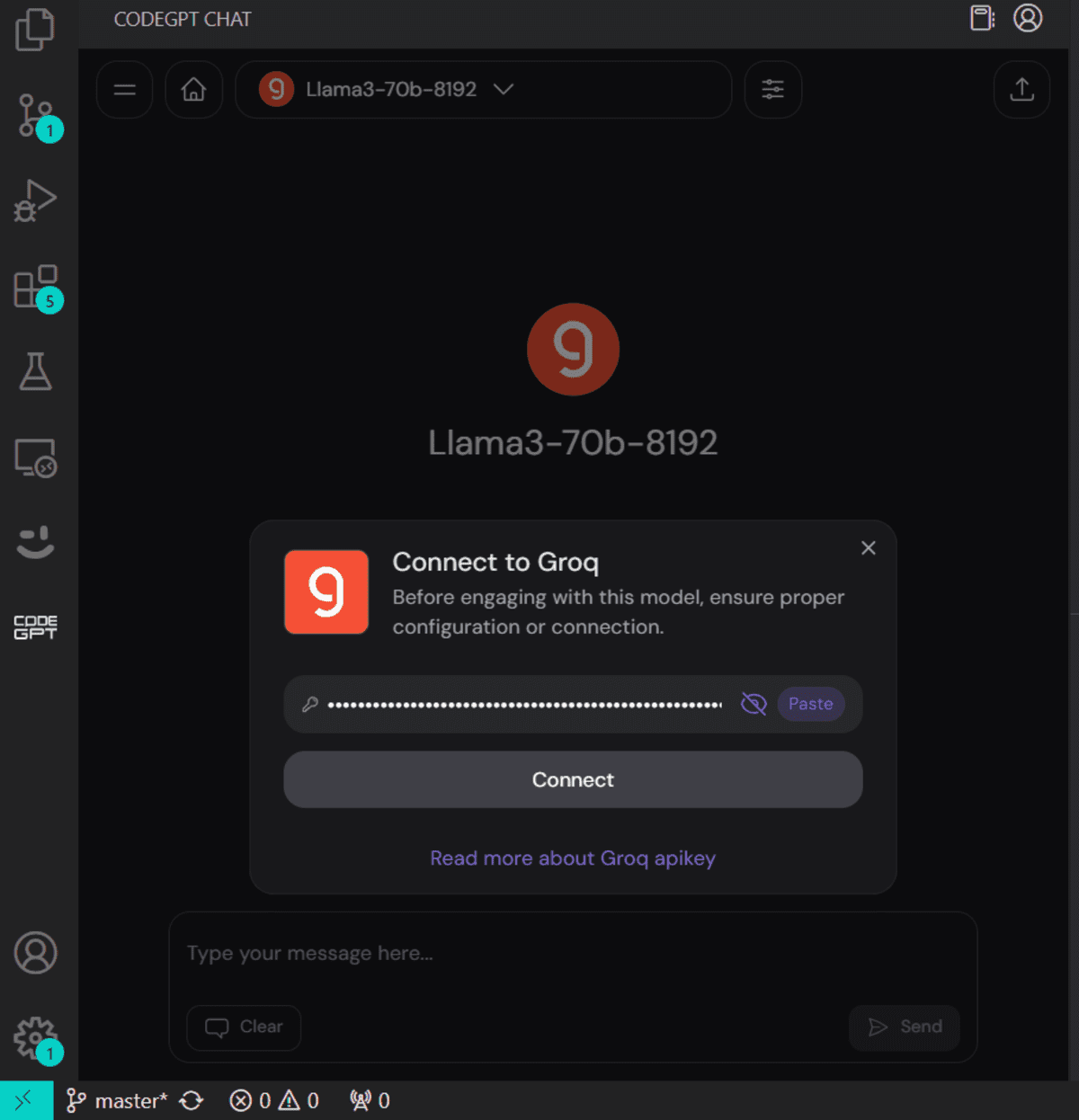

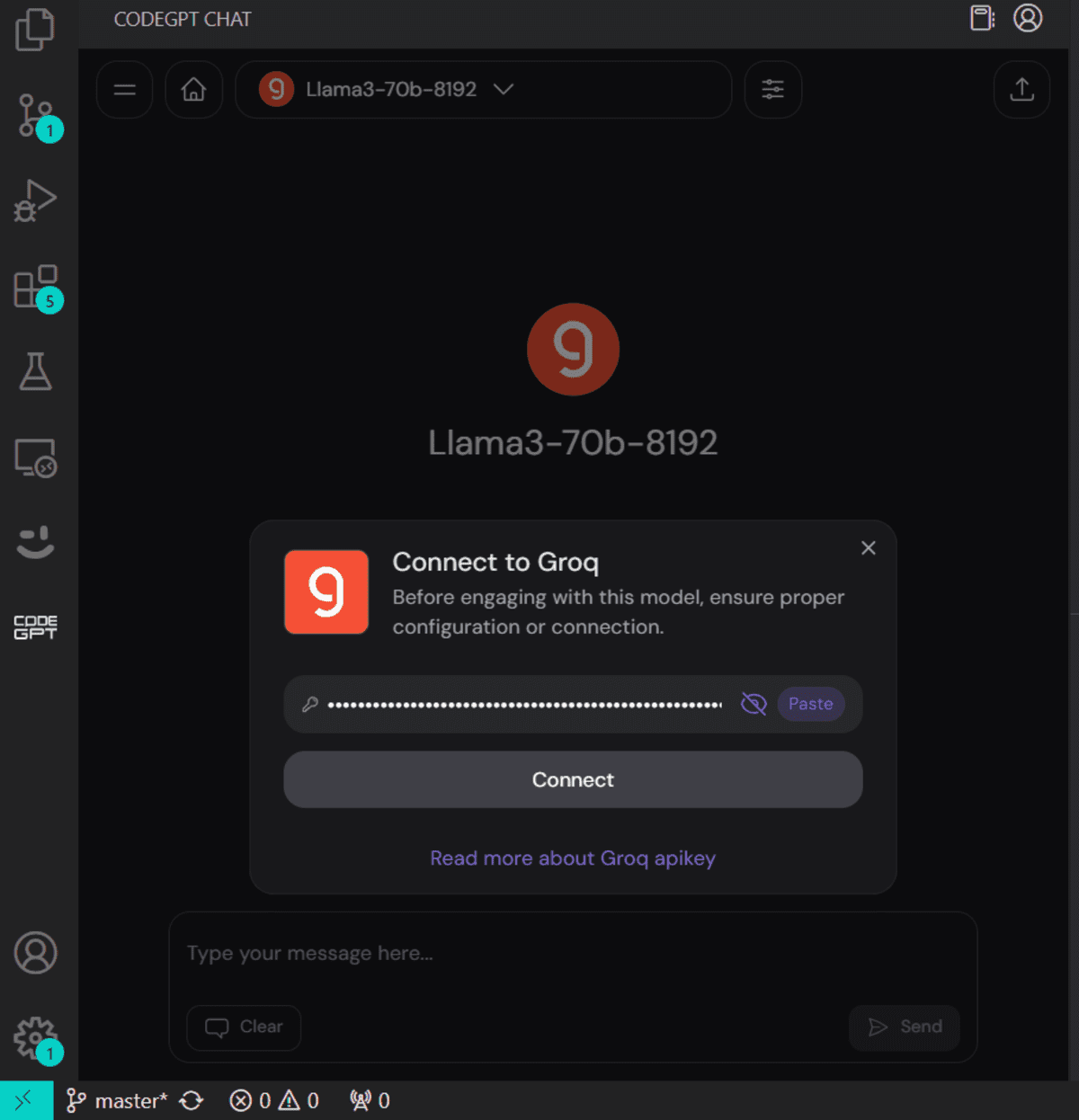

When you select Groq as the model provider, it will ask you to provide an API key. Just paste the same API key and we are good to go. You can even generate a separate API key for CodeGPT.

We will now ask it to write code for the snake game. It took 10 seconds to generate and then run the code.

Here is a demo of how our snake game works.

Learn about the Top five AI Coding Assistants and become an AI-powered developer and data scientist. Remember, AI is here to assist us, not replace us, so be open to it and use it to improve your code writing.

Conclusion

In this tutorial, we learned about the Groq Inference Engine and how to access it locally using the Jan AI Windows application. To top it off, we integrated it into our workflow using the CodeGPT VSCode extension, which is awesome. It generates responses in real time for a better development experience.

Now, most companies will develop their own inference engines to match Groq’s speed. Otherwise, Groq will take the crown in a few months.

Abid Ali Awan (@1abidaliawan) is a certified data scientist professional who loves building machine learning models. Currently, he is focusing on content creation and writing technical blogs on machine learning and data science technologies. Abid holds a Master’s degree in technology management and a bachelor’s degree in telecommunication engineering. His vision is to build an AI product using a graph neural network for students struggling with mental illness.