In December, we announced a new suite of tools to get Generative AI applications to production using Retrieval Augmented Generation (RAG). Since then, we have seen an explosion of RAG applications being built by thousands of customers on the Databricks Data Intelligence Platform.

Today, we’re excited to make several announcements that make it easy for enterprises to build high-quality RAG applications with native capabilities available directly in the Databricks Data Intelligence Platform, including the General Availability of Vector Search and major updates to Model Serving.

The Challenge of High-Quality AI Applications

As we collaborated closely with our customers to build and deploy AI applications, we’ve identified that the greatest challenge is achieving the high standard of quality required for customer-facing systems. Developers spend an inordinate amount of time and effort ensuring that the output of AI applications is accurate, safe, and governed before making it available to their customers, and they often cite accuracy and quality as the biggest blockers to unlocking the value of these exciting new technologies.

Traditionally, the primary focus for maximizing quality has been to deploy an LLM that provides the highest-quality baseline reasoning and knowledge capabilities. However, recent research has shown that base model quality is only one of many determinants of the quality of your AI application. LLMs without enterprise context and guidance still hallucinate because, by default, they have no understanding of your data. AI applications can also expose confidential or inappropriate data if they are not governance-aware and lack proper access controls.

Corning is a materials science company where our glass and ceramics technologies are used in many industrial and scientific applications. We built an AI research assistant using Databricks to index 25M documents of US patent office data. Having the LLM-powered assistant respond to questions with high accuracy was extremely important to us so our researchers could find and further the tasks they were working on. To implement this, we used Databricks Vector Search to augment an LLM with the US patent office data. The Databricks solution significantly improved retrieval speed, response quality, and accuracy. – Denis Kamotsky, Principal Software Engineer, Corning

An AI Systems Approach to Quality

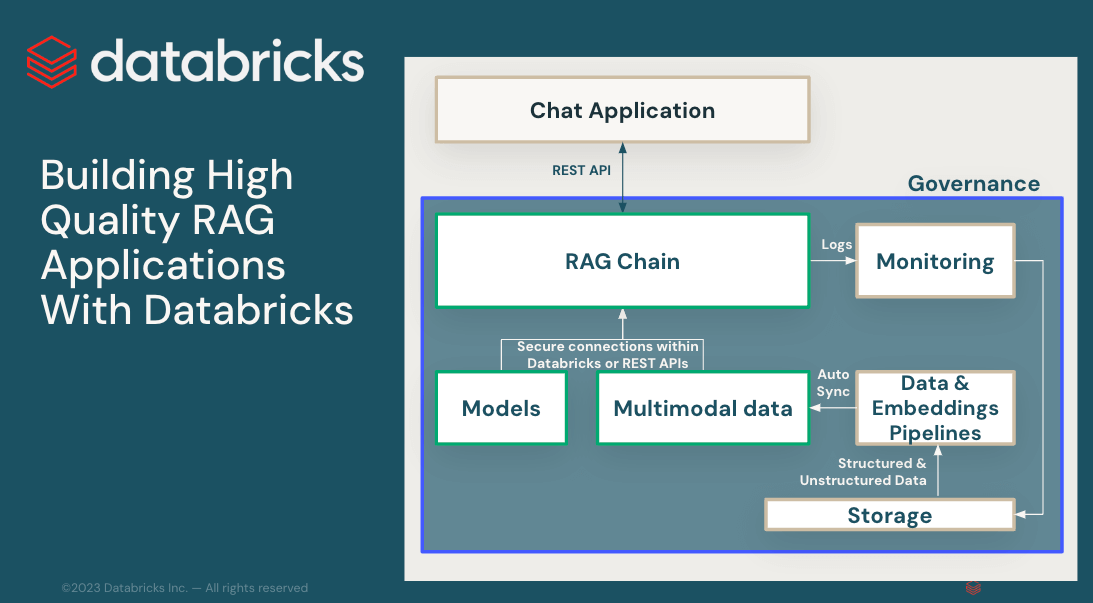

Achieving production quality in GenAI applications requires a comprehensive approach involving multiple components that cover every aspect of the GenAI process: data preparation, retrieval models, language models (either SaaS or open source), ranking, post-processing pipelines, prompt engineering, and training on custom enterprise data. Together, these components constitute an AI system.
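To make the retrieval, ranking, and prompt-engineering components concrete, here is a minimal, self-contained Python sketch of those stages of a RAG pipeline. It is not the Databricks API: the term-frequency "embedding", the cosine scoring, the function names (`embed`, `retrieve`, `build_prompt`), and the sample documents are all hypothetical stand-ins for an embedding model, a vector database such as Vector Search, and enterprise data.

```python
import math
import re
from collections import Counter


def tokenize(text: str) -> list[str]:
    # Lowercase and split on runs of non-alphanumeric characters.
    return re.findall(r"[a-z0-9]+", text.lower())


def embed(text: str) -> Counter:
    # Hypothetical toy "embedding": a term-frequency vector.
    # A real AI system would call an embedding model here.
    return Counter(tokenize(text))


def cosine(a: Counter, b: Counter) -> float:
    # Cosine similarity between two sparse term-frequency vectors.
    dot = sum(a[t] * b[t] for t in a.keys() & b.keys())
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(
        sum(v * v for v in b.values())
    )
    return dot / norm if norm else 0.0


def retrieve(query: str, docs: list[str], k: int = 2) -> list[str]:
    # Retrieval + ranking: score every document against the query,
    # sort by score, and keep the top k that share at least one term.
    q = embed(query)
    scored = [(cosine(q, embed(d)), d) for d in docs]
    scored.sort(key=lambda pair: pair[0], reverse=True)
    return [d for score, d in scored[:k] if score > 0]


def build_prompt(query: str, context: list[str]) -> str:
    # Prompt engineering: ground the LLM in the retrieved context.
    bullets = "\n".join(f"- {c}" for c in context)
    return (
        "Answer using only the context below. If the answer is not there, say so.\n"
        f"Context:\n{bullets}\n"
        f"Question: {query}"
    )


# Made-up sample documents standing in for enterprise data.
docs = [
    "Vacation requests must be submitted two weeks in advance.",
    "The cafeteria is open from 8am to 3pm on weekdays.",
    "Expense reports are due by the fifth business day of each month.",
]
question = "When are expense reports due?"
context = retrieve(question, docs)
prompt = build_prompt(question, context)
print(prompt)
```

The resulting prompt would then be sent to an LLM. In a production AI system, each toy function would be replaced by a managed component, which is why quality depends on the whole pipeline and not only on the base model.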

FordDirect needed to create a unified chatbot to help our dealers assess their performance, inventory, trends, and customer engagement metrics. Databricks Vector Search allowed us to integrate our proprietary data and documentation into our Generative AI solution that uses retrieval-augmented generation (RAG). The integration of Vector Search with Databricks Delta Tables and Unity Catalog made it seamless to update our vector indexes in real time as our source data changes, without needing to touch or redeploy our deployed model/application. – Tom Thomas, VP of Analytics, FordDirect

Today, we’re excited to announce major updates and share more details to help customers build production-quality GenAI applications.

- General Availability of Vector Search, a serverless vector database purpose-built for customers to augment their LLMs with enterprise data.

- General Availability, in the coming weeks, of the Model Serving Foundation Model API, which lets you access and query state-of-the-art LLMs from a serving endpoint.

- Major updates to Model Serving:

  - A new user interface that makes it easier than ever to deploy, serve, monitor, govern, and query LLMs

  - Support for additional state-of-the-art models: Claude 3, Gemini, DBRX, and Llama 3

  - Performance improvements for deploying and querying large LLMs

  - Better governance and auditability, with support for inference tables across all types of serving endpoints

We also previously announced the following capabilities that help deploy production-quality GenAI:

Over the course of this week, we’ll publish detailed blogs on how you can use these new capabilities to build high-quality RAG apps. We’ll also share an insider’s blog on how we built DBRX, an open, general-purpose LLM created by Databricks.